Using add_generation_prompt with tokenizer.apply_chat_template does not… - Cannot use apply_chat_template() because tokenizer.chat_template is not set and no template argument was passed! The apply_chat_template() function converts the messages into a format that the model can understand. You can use that model and tokenizer in ConversationalPipeline, or you can call tokenizer.apply_chat_template() to format chats for inference or training.
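
To make the effect of add_generation_prompt concrete, here is a minimal pure-Python sketch of what a ChatML-style template produces. The function name `render_chatml` and the `<|im_start|>` / `<|im_end|>` markers are assumptions chosen for illustration; this is not the transformers implementation, and real models may use entirely different markers.

```python
def render_chatml(messages, add_generation_prompt=False):
    """Format a list of {"role", "content"} dicts as one ChatML-style string.

    Illustrative only: mimics what a ChatML-like chat template renders.
    """
    parts = []
    for message in messages:
        parts.append(
            f"<|im_start|>{message['role']}\n{message['content']}<|im_end|>\n"
        )
    if add_generation_prompt:
        # Open an assistant turn so the model continues with a reply
        # instead of, for example, extending the user's message.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


messages = [
    {"role": "user", "content": "Hi there!"},
    {"role": "assistant", "content": "Nice to meet you!"},
    {"role": "user", "content": "Can I ask a question?"},
]

# Without the generation prompt: suitable for formatting complete chats.
prompt_for_training = render_chatml(messages)

# With the generation prompt: the string ends with an open assistant turn.
prompt_for_inference = render_chatml(messages, add_generation_prompt=True)
print(prompt_for_inference)
```

The only difference between the two strings is the trailing open assistant header, which is the part add_generation_prompt controls in this sketch.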

microsoft/Phi-3-mini-4k-instruct · tokenizer.apply_chat_template - By storing this information with the… The add_generation_prompt argument is used to add a generation prompt. You can use that model and tokenizer in ConversationalPipeline, or you can call tokenizer.apply_chat_template() to format chats for inference or training. Cannot use apply_chat_template() because tokenizer.chat_template is not set and no template argument was passed!

Add "chat_template" to tokenizer_config.json - What special tokens are you afraid of? Among other things, model tokenizers now optionally contain the key chat_template in the tokenizer_config.json file. As this field begins to be implemented into… For step 1, the tokenizer comes with a handy function called… If you have any chat models, you should set their tokenizer.chat_template attribute, test it using apply_chat_template(), and then push the updated tokenizer to the Hub.
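
As a rough sketch of what adding the chat_template key to tokenizer_config.json involves, the snippet below edits a stand-in config with the standard json module. The helper `add_chat_template`, the ChatML-style template string, and the minimal config contents are all assumptions made for illustration, not taken from any particular model.

```python
import json
import tempfile
from pathlib import Path

# Illustrative ChatML-style Jinja template (an assumption, not a specific
# model's template). It is stored as a plain string; nothing renders it here.
CHAT_TEMPLATE = (
    "{% for message in messages %}"
    "{{ '<|im_start|>' + message['role'] + '\\n' + message['content'] + '<|im_end|>' + '\\n' }}"
    "{% endfor %}"
    "{% if add_generation_prompt %}{{ '<|im_start|>assistant\\n' }}{% endif %}"
)


def add_chat_template(config_path, template):
    """Add or overwrite the "chat_template" key in a tokenizer_config.json file."""
    config_path = Path(config_path)
    config = json.loads(config_path.read_text(encoding="utf-8"))
    config["chat_template"] = template
    config_path.write_text(
        json.dumps(config, indent=2, ensure_ascii=False), encoding="utf-8"
    )
    return config


with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "tokenizer_config.json"
    # Hypothetical minimal config standing in for a real tokenizer_config.json.
    path.write_text(json.dumps({"eos_token": "<|im_end|>"}), encoding="utf-8")

    add_chat_template(path, CHAT_TEMPLATE)
    reloaded = json.loads(path.read_text(encoding="utf-8"))
    print(sorted(reloaded))
```

In practice it is usually simpler to set tokenizer.chat_template on a loaded tokenizer and save or push it, which avoids editing the JSON file by hand.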

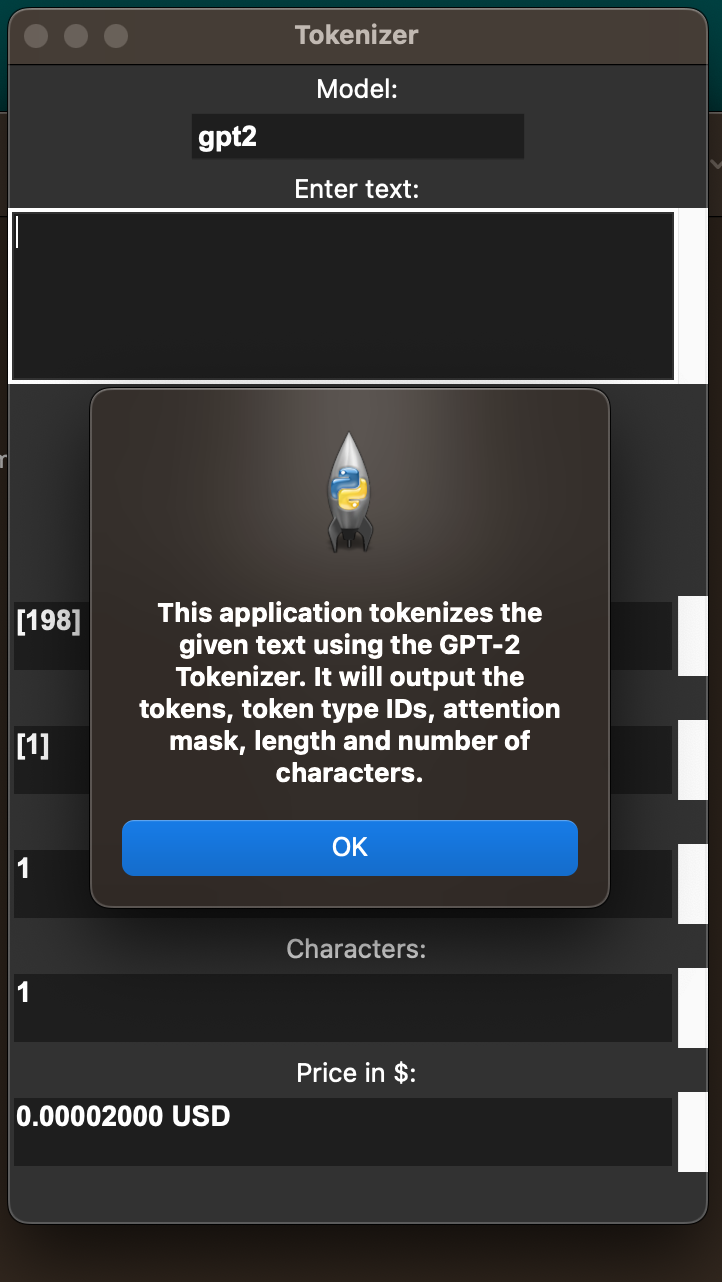

THUDM/chatglm3-6b · Add support for tokenizer.chat_template - Yes, tools/function calling for apply_chat_template is supported for a few selected models. This error states explicitly that in newer versions the tokenizer no longer includes a default chat template, so we must pass a template explicitly or set tokenizer.chat_template. The root of the problem lies in how the transformers library source handles chat… The add_generation_prompt argument is used to add a generation prompt. Tokenize the text, and encode the tokens (convert them into integers). If you have any chat models, you should set their tokenizer.chat_template attribute, test it using apply_chat_template(), and then push the updated tokenizer to the Hub.
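
The "tokenize, then encode" step mentioned above can be illustrated with a toy example. This sketch assumes a whitespace tokenizer and a three-entry vocabulary, both invented here; real model tokenizers use learned subword vocabularies and behave differently.

```python
def tokenize(text):
    """Split text into lowercase whitespace-separated tokens (toy tokenizer)."""
    return text.lower().split()


def encode(tokens, vocab, unk_id=0):
    """Map each token to its integer id, falling back to unk_id if unknown."""
    return [vocab.get(token, unk_id) for token in tokens]


# Hypothetical vocabulary for illustration only.
vocab = {"<unk>": 0, "hello": 1, "world": 2}

tokens = tokenize("Hello world again")
ids = encode(tokens, vocab)
print(tokens, ids)
```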

mkshing/opttokenizerwithchattemplate · Hugging Face - Chat templates are strings containing a Jinja template that specifies how to format a conversation for a given model into a single tokenizable sequence. A chat template, being part of the tokenizer, specifies how to convert conversations, represented as lists of messages, into a single tokenizable string in the format that the model expects. Before feeding the assistant answer… The end of sequence can…

apply_chat_template() with tokenize=False returns incorrect string - Yes, tools/function calling for apply_chat_template is supported for a few selected models. This notebook demonstrated how to apply chat templates to different models, including SmolLM2. The add_generation_prompt argument is used to add a generation prompt. Our goal with chat templates is that tokenizers should handle…

`tokenizer.apply_chat_template` not working as expected for Mistral7B - Our goal with chat templates is that tokenizers should handle chat formatting just as easily as they handle tokenization. Some models which are supported (at the time of writing) include: … This notebook demonstrated how to apply chat templates to different models, including SmolLM2. Cannot use apply_chat_template() because tokenizer.chat_template is not set and no template argument was passed! This error states explicitly that in newer versions the tokenizer no longer includes a default chat template, so we must pass a template explicitly or set tokenizer.chat_template.
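
One way to guard against the "chat_template is not set" error is to check the attribute before formatting. The sketch below is hedged: `ensure_chat_template` and `FALLBACK_TEMPLATE` are hypothetical names introduced here, and a `SimpleNamespace` stub stands in for a real tokenizer so the example runs without any model download.

```python
from types import SimpleNamespace

# Illustrative ChatML-style Jinja template; an assumption, not a template
# that any particular model is known to have been trained with.
FALLBACK_TEMPLATE = (
    "{% for message in messages %}"
    "{{ '<|im_start|>' + message['role'] + '\\n' + message['content'] + '<|im_end|>' + '\\n' }}"
    "{% endfor %}"
    "{% if add_generation_prompt %}{{ '<|im_start|>assistant\\n' }}{% endif %}"
)


def ensure_chat_template(tokenizer, fallback_template):
    """Set tokenizer.chat_template if it is missing; return True if it was set."""
    if getattr(tokenizer, "chat_template", None) is None:
        tokenizer.chat_template = fallback_template
        return True
    return False


# Stub objects standing in for tokenizers without and with a template.
stub_without = SimpleNamespace(chat_template=None)
stub_with = SimpleNamespace(chat_template="{{ messages }}")

was_set = ensure_chat_template(stub_without, FALLBACK_TEMPLATE)
was_kept = not ensure_chat_template(stub_with, FALLBACK_TEMPLATE)
print(was_set, was_kept)
```

With a real tokenizer, the same check would run before calling apply_chat_template(); alternatively, a template can be passed per call through the chat_template argument. Either way, the fallback should match the format the model was actually trained on, otherwise output quality is likely to suffer.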

Hugging Face - The add_generation_prompt argument is used to add a generation prompt. The end of sequence can be filtered out by checking if the last token is tokenizer.eos_token (or tokenizer.eos_token_id for token ids). Our goal with chat templates is that tokenizers should handle chat formatting just as easily as they handle tokenization. Cannot use apply_chat_template() because tokenizer.chat_template is not set and no template argument was passed!
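
The end-of-sequence check described above can be sketched on a plain list of token ids. The helper name `strip_trailing_eos` and the id value 2 are assumptions for illustration; with a real tokenizer the id would come from tokenizer.eos_token_id.

```python
def strip_trailing_eos(token_ids, eos_token_id):
    """Return token_ids without a final end-of-sequence id, if one is present."""
    if token_ids and token_ids[-1] == eos_token_id:
        return token_ids[:-1]
    return token_ids


EOS_ID = 2  # hypothetical id; read it from tokenizer.eos_token_id in practice

with_eos = [15, 27, 98, EOS_ID]
without_eos = [15, 27, 98]

print(strip_trailing_eos(with_eos, EOS_ID))
print(strip_trailing_eos(without_eos, EOS_ID))
```

Only the last position is checked, so an end-of-sequence id that appears earlier in the sequence is left untouched.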

feat Use `tokenizer.apply_chat_template` in HuggingFace Invocation - What special tokens are you afraid of? By storing this information with the… If you have any chat models, you should set their tokenizer.chat_template attribute, test it using apply_chat_template(), and then push the updated tokenizer to the Hub. Chat templates are strings containing a Jinja template that specifies how to format a conversation for a given model into a single tokenizable sequence.

Chatgpt 3 Tokenizer - Our goal with chat templates is that tokenizers should handle chat formatting just as easily as they handle tokenization. Before feeding the assistant answer… In older transformers releases, if a model does not have a chat template set, but there is a default template for its model class, the ConversationalPipeline class and methods like apply_chat_template will use the class template instead. A chat template, being part of the tokenizer, specifies how to convert conversations, represented as lists of messages, into a single tokenizable string in the format that the model expects.